Automatic image captions with Microsoft Azure Computer Vision API

They say a picture is worth a thousand words. But what if you could get a thousand words from a picture automatically? That would be even better, right? Sounds like science fiction, but it isn't that far-fetched. Contemporary image recognition technologies can derive surprising amounts of data from a static image without you needing to be a data scientist.

Digital imaging has come a long way and nowadays we take so many photos on our phones and cameras, that it is easy to forget that not that long time ago it was a chore to get your photos from film to digital. Sharing photos is also light years easier than it used to be.

Computers having an understanding of the content of an image has come even further than that. The most rudimentary form of it is Optical Character Recognition (OCR). This became commonplace in businesses in the 1990s. OCR recognises the shapes of letters in an image and turns them into a digital format that can be edited using a word processor.

Fast forward to today and the smartphone in your pocket can use metadata like location and time to find photos. But even more impressively, you can perform searches based on the content of those images. Both iOS and Android allow you to search your collection of images with terms like "Dogs in Paris". The operating system breaks down the query and uses a combination of metadata and machine learning to find you relevant photographs.

The results are not perfect, but it is nevertheless an impressive engineering achievement if you come to really think of it. Perhaps the best part is that to take advantage of the technology you don't need deep insight into image recognition and machine learning.

What can an API see in a photograph?

Creating a well-functioning image recognition system is not easy and requires a lot of input data. For common business uses it is not a feasible undertaking to build a complex system, but instead you can leverage API providers to get a head start on image recognition.

There are a lot of players in the imaging API field, from cloud computing giants to companies occupying a specific niche. The range of data that can be derived from images (and video) is also wide. To narrow down the scope of this article I focus on a single API and use case: Using the Microsoft Azure Computer Vision API to generate image captions.

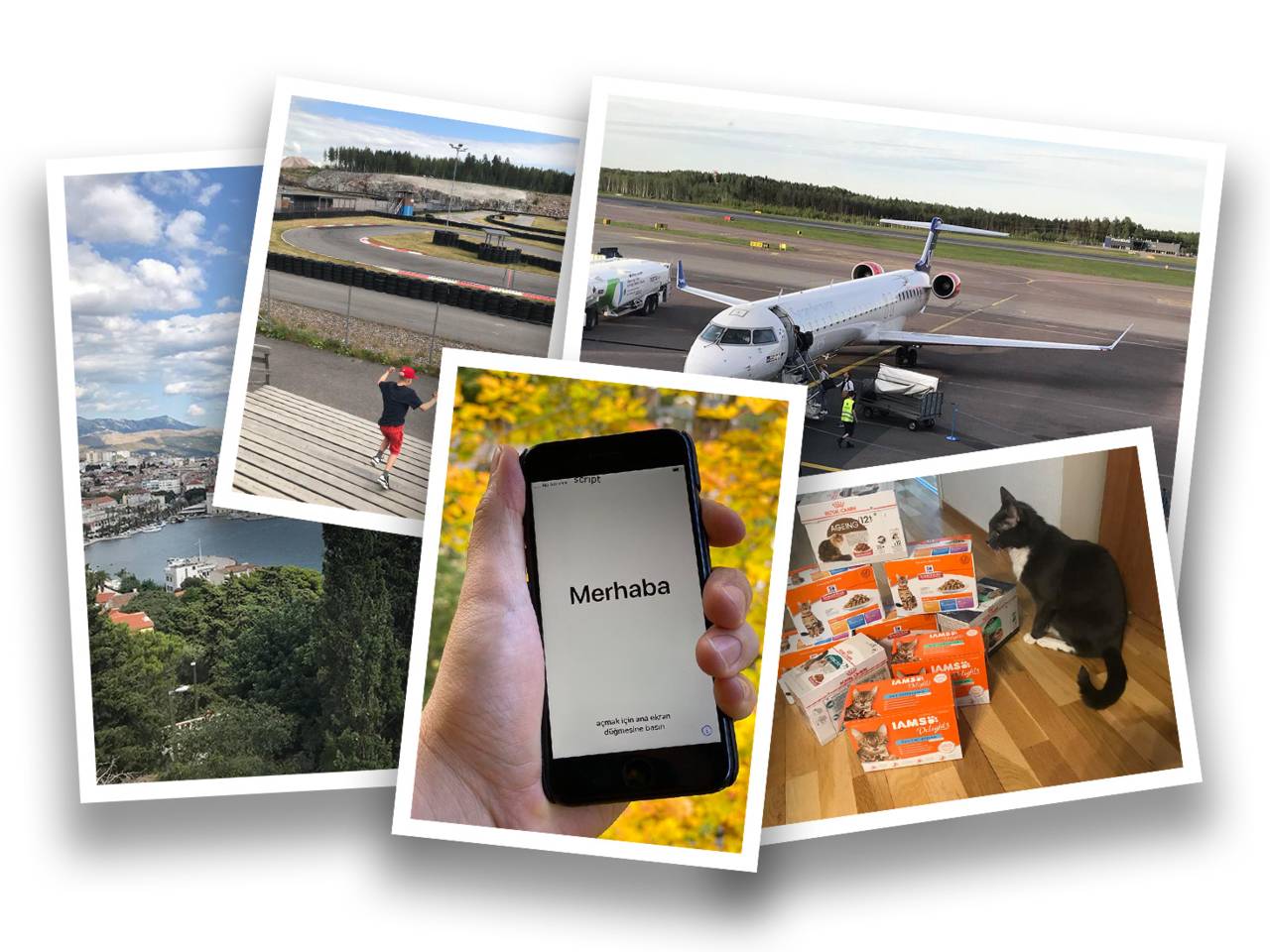

Before getting my hands dirty, I wanted to get a rough understanding just how well the API works. What are its strengths and weaknesses? What kind of results could I expect for different types of images? For this I randomly selected a total of ten images from my smartphone and fed them manually to the API. For the return value I picked the verbal description of what the system thought is in the image. The results are below.

A close up of a cell phone

A house covered in snow

A red and black motorcycle is parked on the side of a building

A large passenger jet sitting on top of a runway

A large body of water with a city in the background

A cat sitting on the floor in front of a box

A person standing next to a fence

A stove top oven sitting inside of a kitchen

A glass of beer on a table

A car driving on a city street

Needless to say, I was blown away how well the API performed on a general-purpose task such as the one I gave it. While I could nitpick on some points in general it did an excellent job at recognising the gist of the image, and even providing details for some images.

Integrating computer vision with eZ Platform

The eZ Platform content engine is built from the ground up to be extensible. One of the mechanisms are Events and Listeners which can be used to trigger actions during the publishing process. To automatically populate an image object with data from an external source, we can hook into the event before publishing and make a call to a remote API.

Once the content object is updated with the description provided by the Azure Computer Vision API, it lives locally and can be used by our built-in Search Engine. Note that the results vary a lot and may result in blank or misleading results for some images. This is why I would not recommend exposing the descriptions directly to the public without curation.

However, even in the complete absence of human intervention the generated descriptions make for good metadata that help in finding images from a media library as demonstrated:

For the more technical readers, the proof-of-concept code for the demo video is up on GitHub. For production use, you should perform the API calls asynchronously (for example with Symfony Messenger) so that slowdowns or outages of the API service don't disrupt the content editing experience. This way the job will run in the background after publishing.

It is easy to try how the API works on a demo page where you can easily upload an image using a browser. For production use, you will need to sign up for an Azure account, which has a limited number of free API calls for testing. Before starting implementation, I recommend benchmarking other imaging APIs such as Clarifai, Vision AI from Google and Rekognition from AWS to see what works best for your use case and price point.

Integrating advanced image recognition technology is just one practical example of how extensibility is a key capability that a DXP should have. Learn more about other features that we think are important when choosing a DXP by downloading our free ebook: 5 Main Features of a Modern Digital Experience Platform

eZ Platform is now Ibexa DXP

Ibexa DXP was announced in October 2020. It replaces the eZ Platform brand name, but behind the scenes it is an evolution of the technology. Read the Ibexa DXP v3.2 announcement blog post to learn all about our new product family: Ibexa Content, Ibexa Experience and Ibexa Commerce